By the end of this series of posts we will have composed some code in Python (roughly 100 lines) that proves the satisfiability of Partition Problem instances with zero knowledge.

Three preliminary notes

- I will assume some basic Computer Science background, as well as familiarity with Python, but not much more.

- I haven't seen this specific protocol in the literature (because I haven't searched for it), but it is a combination of well-known techniques in well-known ways, so I'm quite sure some variation of it is out there.

- For didactic reasons, we'll start with naive and sometimes broken implementations, and improve on them as we go along.

Background

I'm currently working at Starkware, developing some serious zero-knowledge proofs with some brilliant people, based on state-of-the-art research in the field, and we're usually hiring, so drop me a line if you're interested.

This series, however, will deal with much more basic stuff, essentially Computer Science from the 1980s. For those familiar with contemporary protocols such as SNARKs, Bulletproofs, and STARKs: I am not going to present any of them. If you don't know what any of these are, fear not.

What I'm shooting for is less "Cheat sheet for modern ZK proofs" and more "ZK proofs for dummies".

With that in mind - let's get going.

Zero Knowledge Proofs

Zero Knowledge (AKA ZK) proofs are stories of the following type: side A states a claim and proves it to side B, after some deliberation between them, such that:

- B is sure that the claim is true with 99.99999% certainty.

- B has learnt nothing new in the process, other than that the claim is true.

This Wikipedia article contains an excellent explanation of the idea, with some concrete examples.

In this series I'll deal with ZK arguments of knowledge, which are not exactly the same as proofs, but they're close enough. In short: a ZK proof can be trusted completely, even if the side who's trying to prove their claim (usually referred to as "the prover") has unlimited computational power. A ZK argument of knowledge can be trusted under the assumption that a prover who tries to cheat is polynomially bounded (if you use credit cards on the internet, then you already assume that, btw).

In the world of ZK proofs, the other side of the exchange is often called "the verifier". I'll stick to this terminology here.

The Partition Problem

Given a sequence of numbers $a_0, a_1, ..., a_{n-1}$, can one partition this sequence into two subsets that sum up to the same number?

If the sequence in question is $1, 9, 8, 0, 2, 2$, then the answer is clearly yes since $2 + 9 = 8 + 1 + 2 + 0$.

However if the sequence is $2, 3, 4, 5, 6, 7$, then the answer is clearly no, since the sum is odd, and therefore there cannot be two subsets summing exactly to half of it each (the numbers are all integers).

While these are simple enough instances, in general this problem is NP-complete (though it has a pseudo-polynomial algorithm).
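To make "pseudo-polynomial" concrete, here is a minimal sketch of the standard subset-sum dynamic program, assuming all the numbers are non-negative integers. The function name `can_partition` is made up for this illustration and is not part of the protocol built in this series.

```python
def can_partition(numbers):
    """Decide the Partition Problem for a list of non-negative integers."""
    total = sum(numbers)
    if total % 2 != 0:
        # An odd total cannot be split into two equal integer halves.
        return False
    target = total // 2
    # Sums that some subset of the numbers seen so far can reach.
    reachable = {0}
    for x in numbers:
        reachable |= {s + x for s in reachable if s + x <= target}
    return target in reachable


print(can_partition([1, 9, 8, 0, 2, 2]))  # True
print(can_partition([2, 3, 4, 5, 6, 7]))  # False
```

The set `reachable` never holds more than `target + 1` values, so the running time grows with the size of the numbers themselves rather than with the number of digits needed to write them down. That is why this algorithm does not contradict NP-completeness.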

Let's Start Proving!

Suppose we have a Python list $l$ of numbers that defines our Partition Problem instance. We'll say that another list $m$ is a satisfying assignment if:

- len(m) == len(l).

- All of the elements in $m$ are either $1$ or $-1$.

- The dot-product of $l$ and $m$ is 0.

Note that this is equivalent to the statement of the partition problem, if we think of a '1' in $m$ as assigning its corresponding number in $l$ to the left side of the equation, and '-1' as assigning it to the right side.
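The three conditions translate almost word for word into Python. This is only a sketch, and the helper name `is_satisfying_assignment` is introduced here for illustration.

```python
def is_satisfying_assignment(l, m):
    """Check the three conditions that make m a satisfying assignment for l."""
    # Condition 1: the lists have the same length.
    if len(m) != len(l):
        return False
    # Condition 2: every element of m is either 1 or -1.
    if any(side not in (1, -1) for side in m):
        return False
    # Condition 3: the dot product of l and m is 0.
    return sum(num * side for num, side in zip(l, m)) == 0


# The sequence 1, 9, 8, 0, 2, 2 with 9 and one of the 2s on the left side.
print(is_satisfying_assignment([1, 9, 8, 0, 2, 2], [-1, 1, -1, -1, 1, -1]))  # True
```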

Given $l$, a proof for its satisfiability can be given by revealing $m$, but that would violate the ZK requirement.

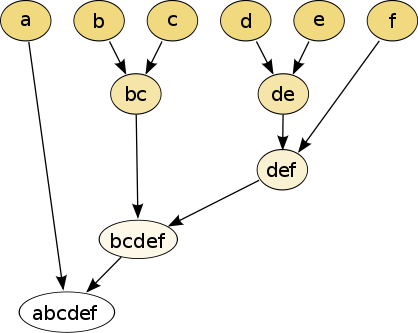

Let's rewrite $l$ as the partial sum list of its dot product with $m$.

Mathematically speaking, let $p[i] := \sum _{0\leq k<i} l[k] \cdot m[k]$ for every $0 \leq i \leq n$, where $n$ is the length of $l$.

So if $l = [4, 11, 8, 1]$, and $m = [1, -1, 1, -1]$, then $p$ will be one element longer: $p = [0, 4, -7, 1, 0]$.

Note that $p$ now has two interesting properties, if $m$ is indeed a satisfying assignment:

- (property 1 of p) It starts and ends with 0.

- (property 2 of p) For every $0\leq i < n$, we have $|l[i]| = |p[i+1] - p[i]|$.
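The example above can be reproduced in a few lines of Python. This is a minimal sketch; `partial_sums` is a hypothetical helper name, and the two assertions simply restate the two properties of $p$.

```python
def partial_sums(l, m):
    """Return p, where p[i] is the sum of l[k] * m[k] over all k < i."""
    p = [0]
    for num, side in zip(l, m):
        p.append(p[-1] + num * side)
    return p


l = [4, 11, 8, 1]
m = [1, -1, 1, -1]
p = partial_sums(l, m)
print(p)  # [0, 4, -7, 1, 0]

# Property 1: p starts and ends with 0.
assert p[0] == 0 and p[-1] == 0
# Property 2: consecutive elements differ, in absolute value, by the matching element of l.
assert all(abs(l[i]) == abs(p[i + 1] - p[i]) for i in range(len(l)))
```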

So here's a first draft for a ZK protocol:

The verifier chooses a random $0 \leq i \leq n$.

If $i = n$, the verifier asks the prover to provide $p[0]$ and $p[n]$ and checks that they are both 0.

Otherwise, the verifier asks the prover to provide $p[i]$ and $p[i+1]$ and checks that indeed $|l[i]| = |p[i+1] - p[i]|$ (recall that $l$ is known to the verifier, as part of the claim made by the prover).
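Here is a rough sketch of a single round of this draft, under the temporary assumption that the prover answers honestly by reading values straight out of $p$. The names `check_query` and `run_one_round` are hypothetical, and passing the whole of `p` to the check is a shortcut: a real verifier would only ever see the two requested values.

```python
import random


def check_query(l, p, i):
    """The verifier's check for query index i, using the prover's answers."""
    n = len(l)
    if i == n:
        # Boundary query: the prover reveals p[0] and p[n].
        return p[0] == 0 and p[n] == 0
    # Step query: the prover reveals p[i] and p[i + 1].
    return abs(l[i]) == abs(p[i + 1] - p[i])


def run_one_round(l, p):
    """Pick a uniformly random query 0 <= i <= n and run the check."""
    i = random.randint(0, len(l))  # randint includes both endpoints
    return check_query(l, p, i)


l = [4, 11, 8, 1]
p = [0, 4, -7, 1, 0]
print(run_one_round(l, p))  # True, whichever query is chosen
```

Splitting the check from the random choice keeps the check deterministic, which makes it easy to try every possible query by hand.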

What if the prover is lying???

The above contains an implicit assumption that when the verifier asks the prover to provide some data, the prover will indeed provide it honestly. We don't want to assume that, but we postpone dealing with this issue to the next post. For now, let's assume everything is kosher.

This doesn't prove anything!

An observant reader will probably point out that asking about a single element doesn't mean much. And that's true, we'd like to ask many queries, and after enough of them - we'll be certain that the claim is true. We'll quantify this more accurately in the third (and last) post.

This is not Zero-Knowledge!

Each query reveals something about $m$: the difference $p[i+1] - p[i]$ equals $l[i] \cdot m[i]$, so its sign gives away $m[i]$ (whenever $l[i] \neq 0$). So this is not zero-knowledge, and after enough queries $m$ can be completely revealed.

That's terrible! Let's fix it.

Manufacturing Zero-Knowledge

Mathematically speaking, we usually say that something provides no new information, if it appears random, or more precisely - if it is uniformly distributed over some appropriately chosen domain. Without getting into the exact definition, this means that to make something ZK, we mix it with randomness. So here's how we do it here.

- Instead of using $m$ as it was given to us, we flip a coin. If it shows heads, we leave $m$ as it is. If it shows tails, we multiply all of $m$'s elements by $-1$. Note that since its elements were initially $-1$ and $1$, and its dot product with $l$ was 0, this does not change its dot product with $l$ at all.

- We choose a random number $r$ and add it to all the elements of $p$. This does not affect the second property of $p$, but it changes the first property such that the first and last elements of $p$ now may not be zero. However, they must still be identical to one another.

Now suppose that before each query - we recompute this randomness (i.e. - flip the coin and change $m$, and choose a random number $r$ and add it to the elements of $p$).

If we choose $r$ carefully, then indeed, every two consecutive elements of $p$ will differ (in absolute value) by the corresponding element in $l$ but look otherwise random.

So, here's the first piece of code we'll need, something that takes a problem (i.e. $l$) and a satisfying assignment (i.e. $m$) and constructs a witness (i.e. $p$) that will attest to the satisfiability of the problem instance:

    import random

    def get_witness(problem, assignment):
        """
        Given an instance of a partition problem via a list of numbers (the problem) and a list of
        (-1, 1), we say that the assignment satisfies the problem if their dot product is 0.
        """
        sum = 0
        mx = 0
        side_obfuscator = 1 - 2 * random.randint(0, 1)
        witness = [sum]
        assert len(problem) == len(assignment)
        for num, side in zip(problem, assignment):
            assert side == 1 or side == -1
            sum += side * num * side_obfuscator
            witness += [sum]
            mx = max(mx, num)
        # make sure that it is a satisfying assignment
        assert sum == 0
        shift = random.randint(0, mx)
        witness = [x + shift for x in witness]
        return witness
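
A quick way to convince yourself that a shifted witness still behaves as promised is to check the two relaxed properties directly. The helper `check_witness` below is a hypothetical addition, not part of the protocol itself, and it inspects the whole witness at once, which a real verifier never gets to do.

```python
def check_witness(problem, witness):
    """Check the two properties that survive the coin flip and the random shift."""
    n = len(problem)
    if len(witness) != n + 1:
        return False
    # Relaxed property 1: first and last elements are equal (not necessarily 0).
    if witness[0] != witness[n]:
        return False
    # Property 2: consecutive elements differ, in absolute value, by problem[i].
    return all(abs(problem[i]) == abs(witness[i + 1] - witness[i]) for i in range(n))


# Hand-built witness for [4, 11, 8, 1]: the partial sums [0, 4, -7, 1, 0],
# negated (coin showed tails) and then shifted by 3.
problem = [4, 11, 8, 1]
witness = [3, -1, 10, 2, 3]
print(check_witness(problem, witness))  # True
```

With `get_witness` in scope, `check_witness(problem, get_witness(problem, assignment))` should hold for every satisfying assignment, whatever the coin flip and the shift turn out to be.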